Rust under the hood: the jemalloc Memory Allocation algorithm

Introduction to jemalloc

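jemalloc stands for “Jason Evans’ malloc,” named after its primary developer. It was originally written for FreeBSD, with a focus on reducing fragmentation and scaling on multiprocessor machines, and has since been adopted by many other systems, including Facebook’s infrastructure and the Firefox web browser. Rust also shipped jemalloc as its default allocator until Rust 1.32. Since then, Rust programs use the system allocator by default and can opt back into jemalloc through a crate such as tikv-jemallocator.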

jemalloc’s design balances four goals that often pull against each other: efficient memory usage, low fragmentation, scalability across many threads and CPUs, and low CPU overhead per allocation.

Memory Segmentation in jemalloc

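jemalloc organizes memory into a three-level hierarchy of chunks, runs, and regions, which lets it use a layout suited to each allocation size class. (This is the classic design used through jemalloc 4; jemalloc 5 replaced chunks and runs with a more general “extent” abstraction, but the layering idea carries over.)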

- Chunks: Chunks are large, contiguous regions of memory that jemalloc obtains directly from the operating system via system calls such as `mmap` or `sbrk`. The default chunk size is a few megabytes (4 MiB in many releases), and it can be adjusted at build time or through jemalloc’s runtime options.

- Runs: Within each chunk, jemalloc further subdivides memory into runs. A run is a contiguous region of memory dedicated to a specific size class.

- Regions: Regions are the actual pieces of memory returned by an allocation request (via `malloc`, `calloc`, `realloc`, etc.). A run holds multiple regions, all of the same size class.
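To make the hierarchy concrete, here is a toy model in Rust. It is not jemalloc’s real data structures: the `Chunk` and `Run` types, the one-page run size, the 4 MiB chunk, and the vector of free flags (standing in for the bitmap a real run would keep) are all simplifying assumptions, and addresses are plain offsets rather than pointers.

```rust
// Toy model of jemalloc's classic chunk -> run -> region hierarchy.
// Names and sizes are illustrative assumptions, not jemalloc internals.
const PAGE_SIZE: usize = 4096;
const CHUNK_SIZE: usize = 4 * 1024 * 1024; // assumed 4 MiB chunk

/// A run: one page split into equal-size regions of a single size class.
struct Run {
    offset: usize,      // byte offset of the run within its chunk
    region_size: usize, // every region in this run has this size
    free: Vec<bool>,    // free[i] is true while region i is available
}

impl Run {
    fn new(offset: usize, region_size: usize) -> Self {
        assert!(region_size > 0 && region_size <= PAGE_SIZE);
        Run { offset, region_size, free: vec![true; PAGE_SIZE / region_size] }
    }

    /// Hand out the lowest-addressed free region, as an offset within the chunk.
    fn alloc(&mut self) -> Option<usize> {
        let idx = self.free.iter().position(|&f| f)?;
        self.free[idx] = false;
        Some(self.offset + idx * self.region_size)
    }
}

/// A chunk: a fixed span of address space carved into page-sized runs.
struct Chunk {
    runs: Vec<Run>,
    next_page: usize, // first page not yet assigned to a run
}

impl Chunk {
    fn new() -> Self {
        Chunk { runs: Vec::new(), next_page: 0 }
    }

    /// Allocate one region of `size_class` bytes, opening a new run if needed.
    fn alloc(&mut self, size_class: usize) -> Option<usize> {
        // Prefer an existing run dedicated to this size class.
        for run in self.runs.iter_mut().filter(|r| r.region_size == size_class) {
            if let Some(off) = run.alloc() {
                return Some(off);
            }
        }
        // Otherwise carve a fresh run from the chunk's unused pages.
        if (self.next_page + 1) * PAGE_SIZE > CHUNK_SIZE {
            return None; // chunk exhausted
        }
        let mut run = Run::new(self.next_page * PAGE_SIZE, size_class);
        self.next_page += 1;
        let off = run.alloc();
        self.runs.push(run);
        off
    }
}

fn main() {
    let mut chunk = Chunk::new();
    let a = chunk.alloc(32);  // first 32-byte run, region 0
    let b = chunk.alloc(32);  // same run, region 1
    let c = chunk.alloc(256); // a new run on the next page
    println!("{a:?} {b:?} {c:?}");
}
```

Freeing is omitted for brevity; in this model it would simply set a region’s flag back to `true` in its run.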

Size Classes and Allocation

jemalloc provides different allocation strategies based on the requested allocation size. Its size classes fall into four categories; the exact boundaries depend on the jemalloc version, the page size, and the build configuration, so treat the figures below as approximate:

- Tiny size class: Requests smaller than the quantum (the 16-byte alignment unit on typical 64-bit builds), such as 8 bytes, are considered ‘tiny’. Many tiny objects are packed into a single run.

- Small size class: Sizes from the quantum up to a few kilobytes (depending on the version and page size) are categorized as ‘small’.

- Large size class: Sizes above the small size class threshold up to the chunk size fall under the ‘large’ size class.

- Huge size class: If the allocation request is larger than a chunk, it falls under the ‘huge’ size class; each huge allocation is managed independently and backed by one or more chunks.

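This multi-tiered approach pays off in both time and space. Grouping same-size objects in the same run keeps per-allocation metadata small (a run can track its regions with a bitmap) and bounds the space wasted by rounding, which reduces both fragmentation and the cost of allocating and freeing.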
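The dispatch between categories boils down to a few threshold comparisons. The sketch below assumes hypothetical thresholds (a 16-byte quantum, a 3 KiB small limit, and a 4 MiB chunk) chosen only for illustration; the `SizeCategory` enum and `categorize` function are our own names, not jemalloc’s.

```rust
// Hypothetical thresholds chosen for illustration; real boundaries depend on
// the jemalloc version, page size, and build configuration.
const QUANTUM: usize = 16;         // alignment unit on typical 64-bit builds
const SMALL_MAX: usize = 3 * 1024; // "a few kilobytes"
const CHUNK_SIZE: usize = 4 << 20; // 4 MiB

#[derive(Debug, PartialEq)]
enum SizeCategory {
    Tiny,  // below the quantum; many share one run
    Small, // served from runs dedicated to one size class
    Large, // runs spanning one or more pages inside a chunk
    Huge,  // bigger than a chunk; backed by its own chunk(s)
}

fn categorize(size: usize) -> SizeCategory {
    if size < QUANTUM {
        SizeCategory::Tiny
    } else if size <= SMALL_MAX {
        SizeCategory::Small
    } else if size <= CHUNK_SIZE {
        SizeCategory::Large
    } else {
        SizeCategory::Huge
    }
}

fn main() {
    for size in [8, 100, 64 * 1024, 16 << 20] {
        println!("{size:>9} bytes -> {:?}", categorize(size));
    }
}
```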

Thread Caching and Arena

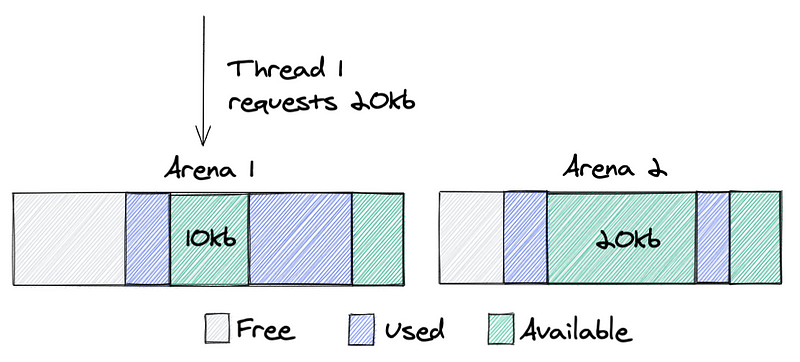

jemalloc combines thread-local caching with multiple arenas to scale across threads while reducing lock contention.

- Thread-local caching (tcache): Each thread keeps a small cache of recently freed objects for each size class. Most allocations and deallocations are served from this cache without taking any lock; the cache is refilled from, and flushed back to, an arena in batches.

- Arenas: Arenas do the actual memory management. Each arena owns its own chunks and its own runs for each size class, and is protected by its own lock. jemalloc creates several arenas (by default, four per CPU) and binds each thread to one of them, so threads using different arenas do not contend for the same lock.
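The interplay between the two layers can be sketched with standard-library primitives. This is a toy model under stated assumptions, not jemalloc’s implementation: it serves a single size class, hands out fake addresses from a disjoint range per arena, binds threads to arenas round-robin, and uses hypothetical constants (`NUM_ARENAS`, `TCACHE_MAX`, `ARENA_SPAN`). What it does show is the fast path touching only thread-local state and the slow path locking a single arena.

```rust
use std::cell::RefCell;
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Mutex;
use std::thread;

// All sizes below are hypothetical, chosen to keep the model small.
const NUM_ARENAS: usize = 4;
const TCACHE_MAX: usize = 8;       // objects a thread may cache before flushing
const OBJ_SIZE: usize = 64;        // the model serves a single size class
const ARENA_SPAN: usize = 1 << 20; // each arena hands out addresses from its own range

/// A toy arena: a lock-protected free list plus a bump pointer.
struct Arena {
    free: Vec<usize>,
    next: usize,
}

const EMPTY_ARENA: Mutex<Arena> = Mutex::new(Arena { free: Vec::new(), next: 0 });
static ARENAS: [Mutex<Arena>; NUM_ARENAS] = [EMPTY_ARENA; NUM_ARENAS];
static NEXT_ARENA: AtomicUsize = AtomicUsize::new(0);

thread_local! {
    // Each thread is bound to one arena on first use (round-robin in this model).
    static MY_ARENA: usize = NEXT_ARENA.fetch_add(1, Ordering::Relaxed) % NUM_ARENAS;
    // The thread cache: touched only by its owner, so it needs no lock.
    static TCACHE: RefCell<Vec<usize>> = RefCell::new(Vec::new());
}

fn alloc() -> usize {
    // Fast path: reuse a recently freed object from this thread's cache.
    if let Some(addr) = TCACHE.with(|c| c.borrow_mut().pop()) {
        return addr;
    }
    // Slow path: lock only this thread's arena, not a global heap lock.
    let idx = MY_ARENA.with(|a| *a);
    let mut arena = ARENAS[idx].lock().unwrap();
    if let Some(addr) = arena.free.pop() {
        return addr;
    }
    assert!(arena.next < ARENA_SPAN, "toy arena exhausted");
    let addr = idx * ARENA_SPAN + arena.next;
    arena.next += OBJ_SIZE;
    addr
}

fn dealloc(addr: usize) {
    TCACHE.with(|c| {
        let mut cache = c.borrow_mut();
        if cache.len() < TCACHE_MAX {
            cache.push(addr); // fast path: no lock taken
            return;
        }
        // Cache full: flush everything back to the arenas that own the objects.
        for a in cache.drain(..).chain(std::iter::once(addr)) {
            ARENAS[a / ARENA_SPAN].lock().unwrap().free.push(a);
        }
    });
}

fn main() {
    let handles: Vec<_> = (0..NUM_ARENAS)
        .map(|_| {
            thread::spawn(|| {
                let a = alloc();
                dealloc(a);
                assert_eq!(alloc(), a); // served from the thread cache
                a / ARENA_SPAN          // the arena this thread was bound to
            })
        })
        .collect();
    for h in handles {
        println!("thread bound to arena {}", h.join().unwrap());
    }
}
```

Returning flushed objects to the arena that owns them, rather than to the freeing thread’s arena, mirrors the idea that memory belongs to one arena even when another thread frees it.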

Dealing with Fragmentation

jemalloc employs several techniques to combat fragmentation:

- Delayed coalescing: jemalloc does not immediately merge freed memory with adjoining free regions. This avoids repeatedly merging and re-splitting the same memory when allocations and deallocations of similar sizes alternate.

- Size class spacing: Size classes are closely spaced at small sizes and grow geometrically (a fixed number of classes per power of two) at larger sizes, so rounding a request up to its class wastes only a bounded fraction of it. This limits internal fragmentation.

- Chunk recycling: jemalloc keeps freed chunks in a pool for reuse by future allocations, which reduces the need to request more memory from the system and thereby lowers both system-call overhead and fragmentation of the address space.
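The size-class spacing idea can be sketched as a rounding function. This is a simplified scheme of our own (the name `size_class` and the constants are assumptions): one 8-byte tiny class, 16-byte spacing up to 64 bytes, then four evenly spaced classes per power of two. It is meant to resemble the spacing jemalloc 4 and later use on common 64-bit builds, not to reproduce jemalloc’s tables exactly.

```rust
// Simplified size-class rounding (an assumption, not jemalloc's exact table):
// tiny class 8, quantum-spaced 16..=64, then 4 classes per power of two.
const QUANTUM: usize = 16;
const CLASSES_PER_DOUBLING: usize = 4;

fn size_class(size: usize) -> usize {
    if size <= 8 {
        return 8; // the single tiny class in this model
    }
    if size <= 4 * QUANTUM {
        // Round up to a multiple of the quantum: 16, 32, 48, 64.
        return (size + QUANTUM - 1) / QUANTUM * QUANTUM;
    }
    // For size in (2^k, 2^(k+1)], classes are spaced 2^k / 4 apart,
    // so the bytes lost to rounding stay below a quarter of the request.
    let k = usize::BITS - 1 - (size - 1).leading_zeros(); // floor(log2(size - 1))
    let spacing = (1usize << k) / CLASSES_PER_DOUBLING;
    (size + spacing - 1) / spacing * spacing
}

fn main() {
    for size in [1, 17, 65, 100, 129, 1000] {
        let class = size_class(size);
        println!("request {size:>4} -> class {class:>4} (waste {} bytes)", class - size);
    }
}
```

Geometric spacing keeps the number of classes small (and thus the number of runs an arena must manage) while capping the relative waste per allocation.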

Customization and Extensibility in jemalloc

jemalloc is highly customizable and can be tuned for specific application needs. It exposes a rich set of options through the `mallctl()` function, which lets an application control thread caching, arena management, chunk allocation, and other internal parameters at run time. jemalloc also supports custom chunk allocators (extent hooks in jemalloc 5), giving the user control over how memory is obtained from the system.

Moreover, jemalloc offers various statistics and profiling tools, enabling developers to understand and optimize memory usage in their applications. With heap profiling, developers can sample memory allocations to identify memory-intensive parts of their code.
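In Rust, jemalloc is plugged in through the standard `#[global_allocator]` attribute: crates such as tikv-jemallocator provide a type implementing `std::alloc::GlobalAlloc`, and tikv-jemalloc-ctl exposes `mallctl`-based settings and statistics. Because those crates are external, the self-contained sketch below uses the same hook with a hypothetical `Counting` wrapper around `std::alloc::System` that tracks live heap bytes, a rough stand-in for the kind of counters jemalloc reports through its statistics.

```rust
use std::alloc::{GlobalAlloc, Layout, System};
use std::sync::atomic::{AtomicUsize, Ordering};

/// Hypothetical wrapper: delegates to the system allocator and counts live bytes.
struct Counting;

static ALLOCATED: AtomicUsize = AtomicUsize::new(0);

unsafe impl GlobalAlloc for Counting {
    unsafe fn alloc(&self, layout: Layout) -> *mut u8 {
        let p = unsafe { System.alloc(layout) };
        if !p.is_null() {
            ALLOCATED.fetch_add(layout.size(), Ordering::Relaxed);
        }
        p
    }

    unsafe fn dealloc(&self, ptr: *mut u8, layout: Layout) {
        unsafe { System.dealloc(ptr, layout) };
        ALLOCATED.fetch_sub(layout.size(), Ordering::Relaxed);
    }
}

// Every heap allocation in the program now goes through `Counting`,
// just as it would go through jemalloc with a jemalloc-backed allocator type.
#[global_allocator]
static GLOBAL: Counting = Counting;

/// Bytes currently allocated and not yet freed, across all threads.
fn allocated() -> usize {
    ALLOCATED.load(Ordering::Relaxed)
}

fn main() {
    let before = allocated();
    let v: Vec<u8> = Vec::with_capacity(1024);
    let after = allocated();
    println!("live heap bytes grew by {}", after - before);
    drop(v);
}
```

Swapping `Counting` for a jemalloc-backed type changes which allocator serves every `Box`, `Vec`, and `String` in the program without touching the rest of the code.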

Memory Usage Efficiency

One of jemalloc’s main benefits is efficient memory usage. Managing memory as chunks, runs, and regions and rounding each request up to a size class keeps objects of the same size packed together, and the spacing of the size classes bounds how much space rounding can waste per allocation. Together, these strategies make jemalloc a good choice for applications that need to manage memory resources carefully.

Scalability and Performance

jemalloc’s design scales well across many cores and threads, which matters for multicore and multithreaded applications. Thread-local caches (tcaches) and multiple arenas minimize synchronization: most allocations and deallocations hit the thread’s own cache and complete in constant time without taking a lock, and when a thread does need its arena, it shares that arena’s lock with only a few other threads. This avoids lock contention, a common performance bottleneck in multithreaded applications.

Check out more articles about Rust in my Rust Programming Library!

Final Thoughts

jemalloc is a powerful and flexible memory allocation library that offers several advantages over traditional allocators, including improved memory efficiency, reduced fragmentation, and better scalability in multithreaded applications. While it may be more complex to understand and tune, its benefits make it a worthwhile investment for many applications. As with any tool, it’s crucial to understand your application’s needs and behaviour to make the best use of jemalloc. Understanding how jemalloc works can help you write better, more efficient software and make more informed decisions about memory management in your applications.

Stay tuned, and happy coding!

Visit my Blog for more articles, news, and software engineering stuff!

Follow me on Medium, LinkedIn, and Twitter.

Check out my most recent book — Application Security: A Quick Reference to the Building Blocks of Secure Software.

All the best,

Luis Soares

CTO | Head of Engineering | Blockchain Engineer | Solidity | Rust | Smart Contracts | Web3 | Cyber Security

#rust #programming #language #memory #allocation #traits #abstract #smartcontracts #web3 #security #privacy #confidentiality #cryptography #softwareengineering #softwaredevelopment #coding #software